Call Quality Control Tool for Americor

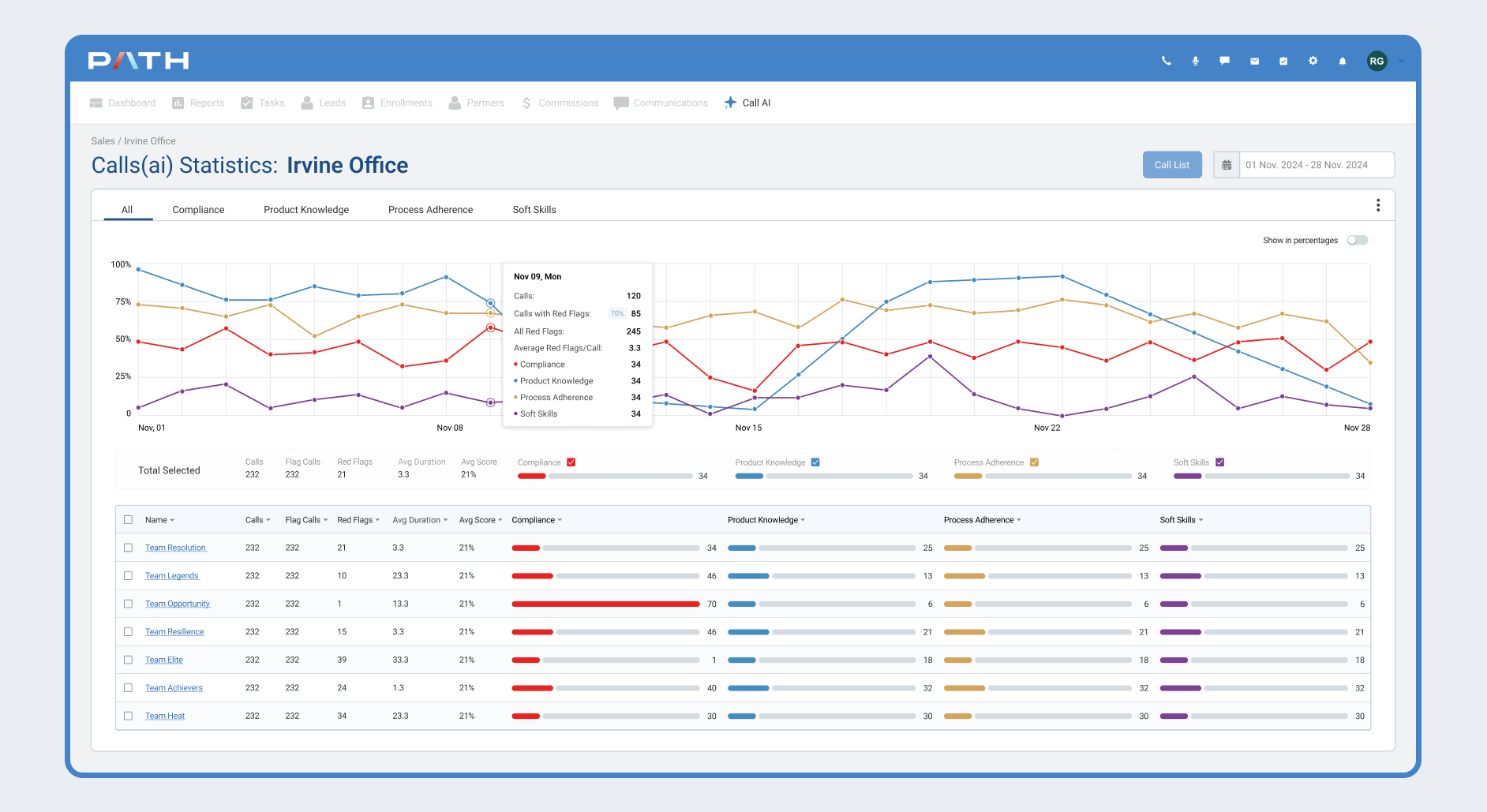

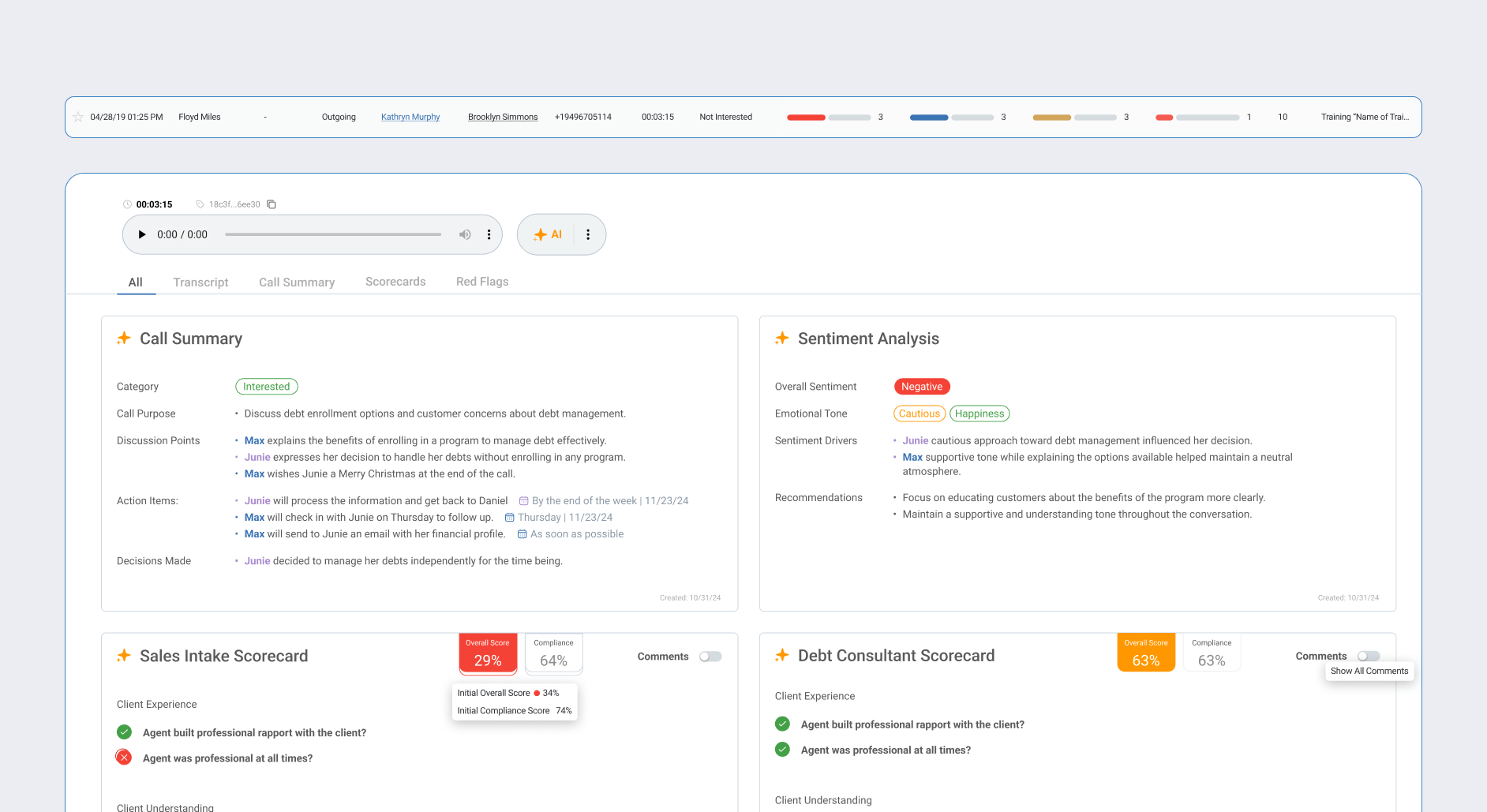

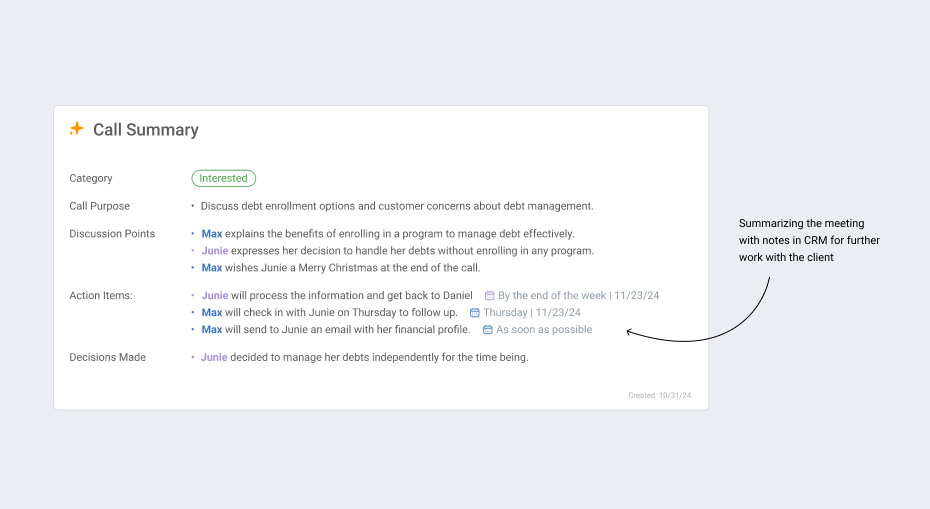

To improve the quality of sales calls and increase the Contact-to-Enrollment ratio, we developed a scalable call quality control system for Americor. Previously, the review process was entirely manual: managers listened to random recordings with no structured way to flag calls or visualize issues. The new tool was the first attempt to systematize call evaluation and automate error detection. We started with in-depth research into user roles and pain points, which shaped our design approach and priorities.

We analyzed the existing workflow and pain points across the sales, compliance, and analytics teams. Key issues included:

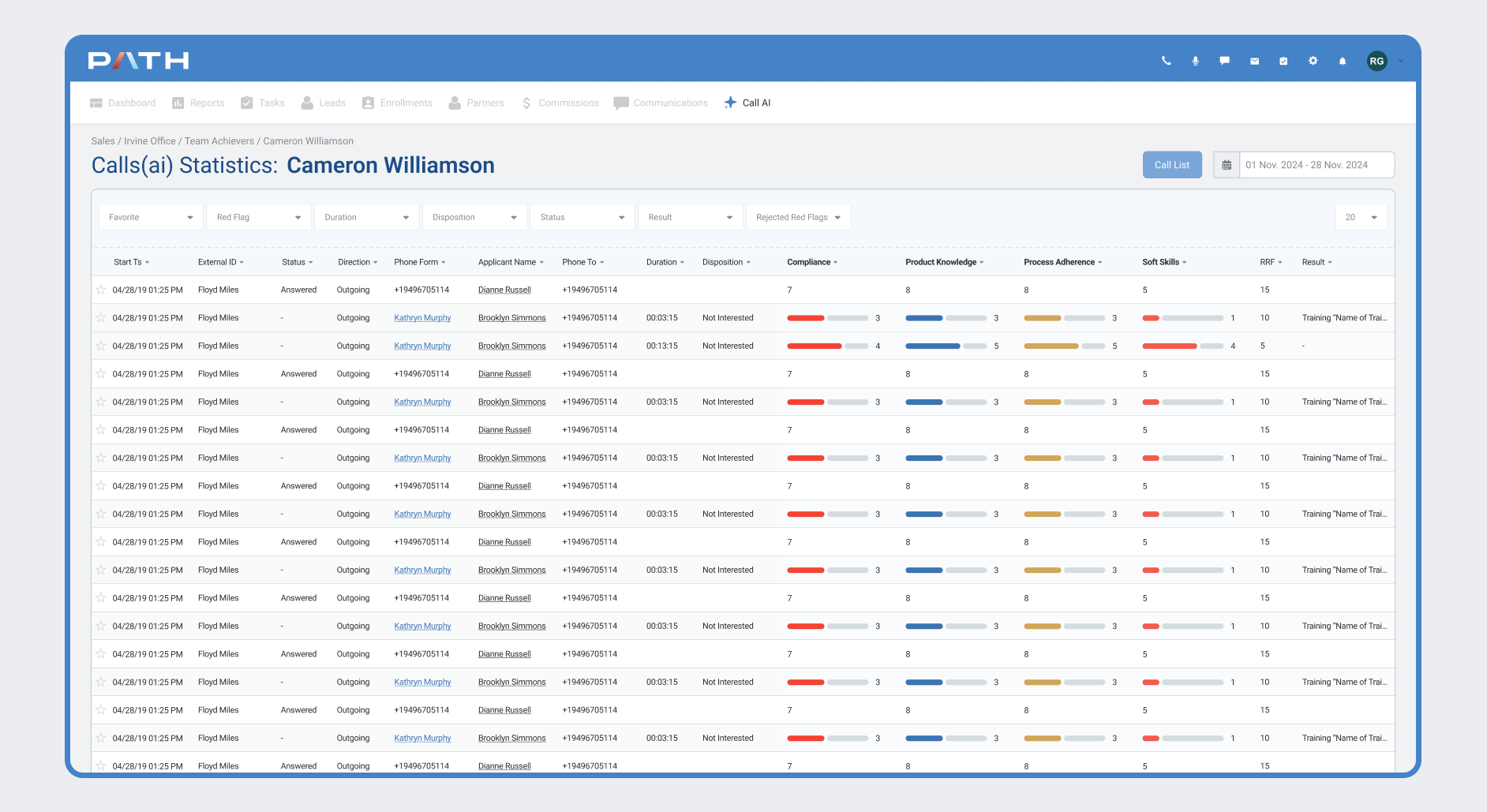

- No centralized dashboard to track red-flagged calls

- No prioritization, making it hard to tell which calls need review first

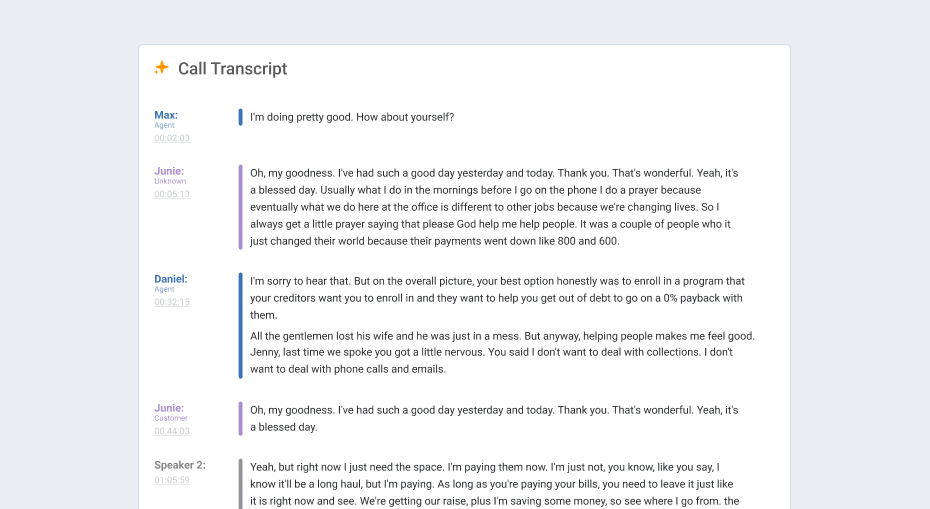

- Inability to quickly listen to a flagged call and review its transcript

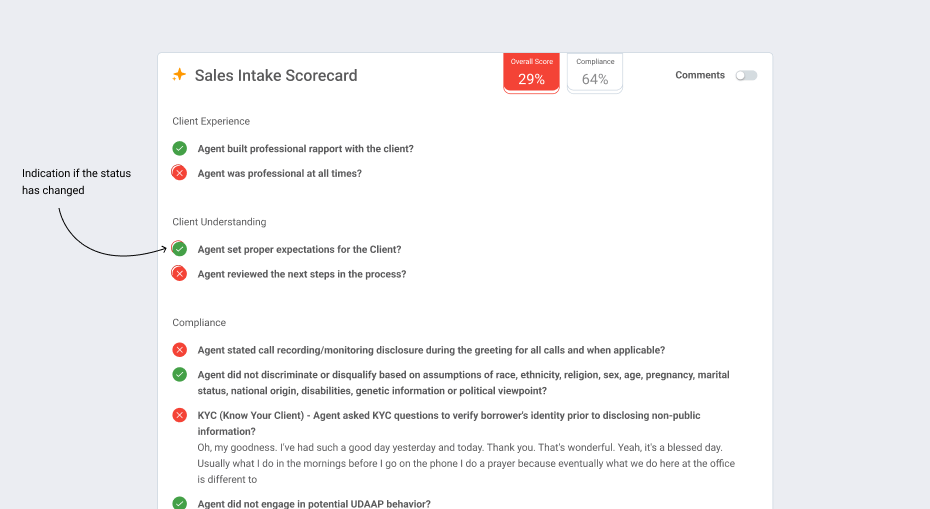

- Lack of trust in the AI scoring logic

- Unclear who is responsible for confirming or dismissing flags

- No historical view of confirmed/dismissed flags

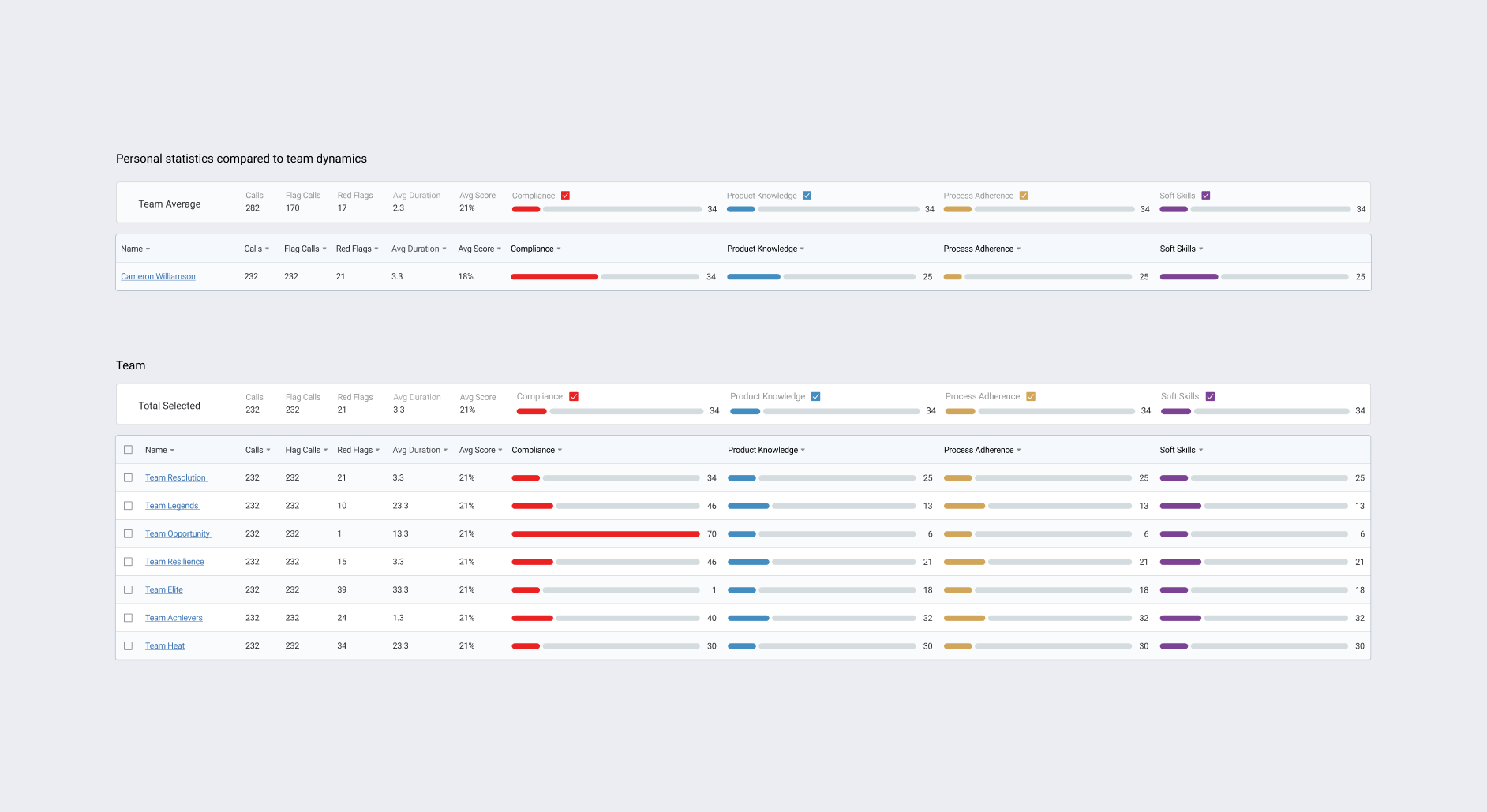

- No access to personal red flags and scoring breakdowns

- Unclear reasons for low-scoring calls

- No feedback loop or self-coaching system

- Unconfirmed calls remain in the "Unvalidated" status

- No reports segmented by flag type, team, or channel

- No customer journey visualization across interactions

- Fragmented data across Five9, CRM, and Scorecards

- Compliance team struggles to identify recurring violations

This research laid the foundation for our product hypotheses, architecture, and initial delivery plan.

Based on the internal presentation, we defined the following vision and assumptions:

- Automatically generated red flags will increase transparency and scalability

- Visualizing flagged calls will help managers act faster

- Manual confirmation/dismissal will build trust in AI scoring and close the feedback loop

The tool was designed around four user roles:

- Sales Director

- Sales Manager

- Debt Consultant

- Compliance Officer
Throughout development, we realized the tool should feel motivational rather than punitive: it should help consultants recognize their weak spots and improve, not make them feel surveilled.

We documented open product questions: Should flags be confirmed only by direct managers, or by any reviewer? How should the UI distinguish confirmed flags from unconfirmed ones? These questions guided the UX logic and access permissions.